Do you remember your first email A/B test? I do. I felt anxious and afraid at the same time, because now I had to put into practice what I had learned in college.

I knew I needed a large enough sample size to run the email test. I knew I needed to test long enough to get statistically significant results.

What I was not sure about was how large "large enough" was for an email sample, or how long "long enough" was for the test.

A Google search gave me many answers.

It turns out that I was not alone: these are the two most common A/B testing questions we receive from clients. And the reason the typical Google search results aren't that useful is that they talk about A/B testing in a perfect, theoretical, non-marketing world.

So, I decided to do some research to help you answer these questions in practice. By the end of this post, you should know how to determine the right sample size and time frame for your next email send.

Theory versus reality of sample size and timing in A/B tests.

In theory, to determine the winner between Variation A and Variation B, you have to wait until you have enough results. This way you can see if there is a statistically significant difference between the two.

Depending on your company, sample size, and how you perform A/B testing, statistically significant results can occur within hours, days, or weeks. You just have to let the test run until you get those results. In theory, you shouldn't limit the time for collecting results.

For many A/B tests, waiting is not a problem. Testing headline copy on a landing page? It's fine to wait a month for results.

But with email, waiting can be a problem, for several practical reasons.

Each email has a limited audience.

Unlike a landing page, where you can keep collecting new audience members over time, once you send an A/B test email, that's it. You cannot add more people to that A/B test.

You need to figure out how to squeeze the most juice out of your emails.

This usually requires you to:

- send an A/B test to the smallest part of your list needed to get statistically significant results,

- choose a winner,

- send the winning option to the rest of the email list.

You are juggling at least a few email sends a week.

If you spend too much time collecting results, you could miss the window for sending your next email. That can have worse consequences than sending a winner that is not statistically significant to one segment of your database.

Email sends are often time-sensitive.

Your marketing emails are optimized for delivery at specific times of day, whether they are timed to support the launch of a new campaign or to land in recipients' inboxes when they would like to receive them.

If you wait until your test is fully statistically significant, you may lose timeliness and relevance, which could defeat the purpose of sending the email in the first place.

This is why email A/B testing programs have a built-in timing setting, that is, a time limit for the test. At the end of this time period, if neither result is statistically significant, one variation that you choose in advance will be sent to the rest of the list.

This way, you can still run A/B tests in email. You can also work around your email marketing scheduling demands and make sure people always receive timely content.

Thus, to run A/B tests in email while still optimizing your sends for the best results, you must take both sample size and timing into account.

How to determine your sample size and testing time frame for an email send?

Ok, now let's move on to the part you were waiting for:

How do you actually calculate the sample size and time required for your next email A/B test?

As I mentioned above, each email A/B test can only be sent to a limited audience, so you need to figure out how to maximize its results.

To do this, you need to determine the smallest part of your total list needed to obtain statistically significant results. This is how you calculate it.

Evaluate if you have enough email contacts in your list.

To A/B test a sample of your list, you need a large enough list: at least 1,000 contacts. If you have fewer than that, the proportion of your list required to get statistically significant results gets bigger and bigger.

For example, to get statistically significant results from a small list, you might have to test 85% or 95% of it. The number of people on your list who have not yet been tested would then be so small that you might as well send one version of the email to half of your list and the other version to the other half, and then measure the difference.

Your results may not be statistically significant at the end of it all, but at least you are gathering knowledge as you expand your lists to have over 1000 contacts.

Use a sample size calculator.

This is what the calculator looks like when you open it:

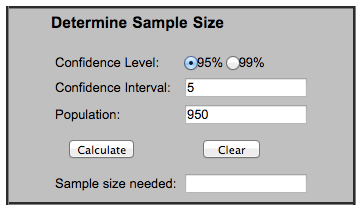

Enter the confidence level, confidence interval, and population into the tool.

Population: Your sample represents a larger group of people. This larger group is called your population.

In email, your population is the typical number of people on your list who get emails delivered to them, not the number of people you sent emails to.

To calculate the population, I would look at the last three to five emails you sent to this list and average the total number of emails delivered.

Use an average when calculating the sample size as the total number of delivered emails will fluctuate.

Confidence interval: You may have heard this called the "margin of error." Many polls use it, including political polls. It is the range of results you can expect from this A/B test once it is run with the full population.

For example, if you have an interval of 5 and 60% of your sample opens your control email, you can be confident that between 55% (60 minus 5) and 65% (60 plus 5) of the full population would also have opened that email.

The larger the interval you choose, the more confident you can be that the true actions of the population are accounted for in that interval. At the same time, larger intervals give you less definite results. This is a trade-off you have to make in your emails.

For our purposes, don't get too carried away with confidence intervals. When you are just starting out with A / B tests, I would recommend choosing a smaller interval, for example, around 5.

Confidence level: This tells you how sure you can be that your sample results lie within the confidence interval above. The lower the percentage, the less sure you can be about the results. The higher the percentage, the more people you will need in your sample.

Example:

Let's pretend we are sending our first A/B test. We have 1,000 people on our list, and the delivery rate is 95%. We want to be 95% confident that our winning email metrics fall within a 5-point interval of our population metrics.

Here's what we would enter into the tool:

- Population: 950 people;

- Confidence level: 95%;

- Confidence interval: 5.

Click Calculate.

Ta-da! The calculator will give you your sample size. In our example, the sample size is 274.

This is the size of one of your variations. So for your email send, if you have one control and one variation, you need to double that number. If you had a control and two variations, you would triple it. And so on.
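
If you are curious about the arithmetic behind the tool, here is a minimal sketch. It assumes the calculator uses Cochran's sample size formula with a finite population correction and a worst-case proportion of 50%. That is a common choice for calculators of this kind, but the source does not confirm it. The function name `sample_size` and its parameters are illustrative.

```python
import math
from statistics import NormalDist


def sample_size(population: int, confidence_level: float,
                interval_points: float, proportion: float = 0.5) -> int:
    """Per-variation sample size (assumed formula: Cochran + finite population correction)."""
    # Two-sided z-score for the confidence level (about 1.96 for 95%).
    z = NormalDist().inv_cdf(1 - (1 - confidence_level) / 2)
    margin = interval_points / 100  # an interval of 5 means +/- 0.05
    # Sample size for an effectively unlimited population.
    n0 = (z ** 2) * proportion * (1 - proportion) / margin ** 2
    # Shrink it to account for the limited size of the email list.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


per_variation = sample_size(population=950, confidence_level=0.95, interval_points=5)
total_for_two = per_variation * 2  # one control plus one variation
print(per_variation, total_for_two)  # 274 548
```

With the example inputs (population 950, confidence level 95%, interval 5), this reproduces the 274 from the calculator, and doubling it gives the 548 contacts needed for a control plus one variation.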

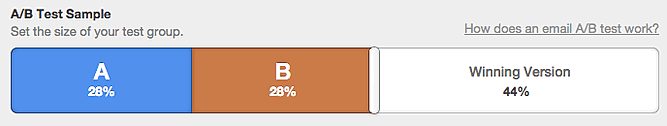

Depending on your email program, you may need to express the sample size as a percentage of the whole list.

HubSpot customers: when you run an email A/B test, you need to choose the percentage of contacts to send each version to, not just the sample size.

To do this, divide the number in your sample by the total number of contacts in your list. This is what the math looks like using the example above:

274 / 1,000 = 27.4%

This means that each sample (both your control and your variation) needs to be sent to 27-28% of your audience. In other words, roughly 55% of your total list will receive the test.
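
The same percentage math can be sketched in a few lines of Python. The helper name `list_share` is hypothetical, and the numbers are the ones from the example above.

```python
def list_share(sample_per_variation: int, list_size: int, variations: int = 2):
    """Return (percent of list per variation, percent of list in the whole test)."""
    per_variation_pct = sample_per_variation / list_size * 100
    total_pct = per_variation_pct * variations
    return round(per_variation_pct, 1), round(total_pct, 1)


per_variation_pct, total_pct = list_share(274, 1000)
print(per_variation_pct, total_pct)  # 27.4 54.8
```

The remaining 45% or so of the list is what later receives the winning version.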

And that's it! You should now be ready to choose your send timing.

How to choose the right timing for your A/B test?

This is where we get into the reality of sending email. You have to figure out how long to run your email A/B test before sending the winning version to the rest of your list.

The timing aspect is slightly less statistically driven. But you should definitely use past data. This will help you make better decisions. Here's how you can do it.

Analytics.

Find out when clicks, opens, or other success metrics start to drop off. Look at your past emails to figure this out.

For example, what percentage of the total number of clicks did you get on the first day? If you find that you get 70% of your clicks in the first 24 hours, and then 5% every day after that, it makes sense to limit your email A/B test window to 24 hours, since waiting longer would add very little data.

In this scenario, you would probably keep your time window at 24 hours. At the end of it, your email program should tell you whether it can determine a statistically significant winner.
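
To find that drop-off point in your own data, you can compute the cumulative share of clicks per day. The sketch below is illustrative only: the daily click counts are made up to match the 70% / 5% pattern described above, and the function names are hypothetical. In practice, you would export the real numbers from your email tool.

```python
from itertools import accumulate


def cumulative_click_share(clicks_per_day):
    """Share of all clicks collected by the end of each day."""
    total = sum(clicks_per_day)
    if total == 0:
        raise ValueError("no clicks recorded")
    return [running / total for running in accumulate(clicks_per_day)]


def days_to_reach(clicks_per_day, target_share):
    """First day (1-based) on which the cumulative share reaches target_share."""
    for day, share in enumerate(cumulative_click_share(clicks_per_day), start=1):
        if share >= target_share:
            return day
    return None  # target never reached


# Hypothetical past email: 70% of clicks on day 1, then 5% per day.
clicks = [700, 50, 50, 50, 50, 50, 50]
print(cumulative_click_share(clicks)[:3])  # [0.7, 0.75, 0.8]
print(days_to_reach(clicks, 0.70))  # 1
print(days_to_reach(clicks, 0.90))  # 5
```

Here, waiting four more days only moves you from 70% to 90% of the clicks, which is the kind of trade-off that argues for a 24-hour window.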

Then it's up to you what to do next. If you have a large enough sample size and find a statistically significant winner at the end of the testing period, many email marketing programs will automatically and immediately send the winning option.

If your sample size is large enough and there is no statistically significant winner after the test period, email marketing tools may also let you automatically send a variation of your choice.

If you have a smaller sample size or are running a 50/50 A/B test, when to send the next email based on the results of the original email is entirely up to you.

If you have time limits on when to send the winning email to the rest of the list, find out how late you can send the winner without it being out of date or interfering with your other email sends.

For example, if you sent an email at 6:00 pm ET for an urgent sale that ends at midnight ET, you would not want to determine the winner of the A/B test at 11:00 pm. Instead, you would want to send the winning email closer to 8 or 9 pm. This gives the people who were not part of the A/B test enough time to act on the email.

And that's pretty much it, folks. After performing these calculations and examining your data, you should be much better equipped to run email A/B tests that are statistically sound enough to help you make real progress in email marketing.

Based on materials from the site: https://blog.hubspot.com.

❤️ How else can you test email?

Simple A/B tests consist of sending multiple subject lines to see which one generates more opens. More sophisticated A/B tests can pit completely different email templates against each other to see which one generates the most clicks.

⏩ How granular can your email tests be?

Every element of an email can be tested.

❤️ What should you look at when testing email copy?

Look at the length of the copy, word order, content, whether or not the recipient is addressed by name, the style and tone of the copy, and so on.

⏩ How do you choose which types of email tests to run?

Define goals and consider test options to achieve them. Choose the most convenient and efficient one for you.

⏩ When should you not use an A/B test?

There are four reasons not to test:

- there is no significant traffic yet,

- there is no valid hypothesis,

- the risk of acting immediately is low,

- the safety of testing cannot be ensured.

⏩ What is one of the common mistakes when doing A / B tests?

One of the common mistakes in A/B testing is running a split test too early. For example, if you are launching a new OptinMonster campaign, you should wait a little until enough data is available to establish a baseline.

⏩ How do you run an A/B test in Excel?

To calculate the statistical power of an A/B test result in Excel:

- choose whether the test is 1-tailed or 2-tailed (in most cases, if you run A/B tests using the main tools available on the market, this should be 2-tailed),

- select the confidence level (90% / 95% / 99%),

- add the visitor numbers for the control and the variation.
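
If you would rather not use a spreadsheet, the same inputs can be fed into a few lines of Python. This is a sketch under stated assumptions: it uses a two-proportion z-test, which is one common way to compare a control with a variation, and the source does not say which test the Excel calculation uses. It also needs the number of conversions (opens or clicks) per version, which the steps above do not list. All names and figures are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b, two_tailed=True):
    """Return (z, p_value) comparing the conversion rates of A (control) and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("conversion rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    if two_tailed:
        p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    else:
        # 1-tailed: only asks whether B is better than A.
        p_value = 1 - NormalDist().cdf(z)
    return z, p_value


def is_significant(p_value, confidence_level=0.95):
    return p_value < 1 - confidence_level


# Hypothetical numbers: 1,000 recipients per version, 100 vs. 130 clicks.
z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=130, n_b=1000)
print(round(z, 2), round(p, 3))
print(is_significant(p, 0.95), is_significant(p, 0.99))  # True False
```

With these made-up figures, the difference is significant at the 95% level but not at 99%, which shows why the confidence level you select changes the verdict.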